If you develop applications that accept untrusted input, you have to deal with validation, whichever framework or library you use. It is a common task, so I am going to share a recipe that neatly integrates the validation layer with the business logic layer. It is not about what data to validate or how to validate it; it is mostly about how to make the code look better using Python decorators and magic methods.

Let’s say we have a class User with a method login.

```python
class User(object):
    def login(self, username, password):
        ...
```

And we have a validation schema, Credentials. I use Colander, but it does not matter; you can simply replace it with your favorite library:

```python
import colander


class Credentials(colander.MappingSchema):
    username = colander.SchemaNode(
        colander.String(),
        validator=colander.Regex(r'^[a-z0-9\_\-\.]{1,20}$'),
    )
    password = colander.SchemaNode(
        colander.String(),
        validator=colander.Length(min=1, max=100),
    )
```
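Since the recipe only needs an object with a `deserialize` method that returns clean data or raises on bad data, you can try it without Colander. The sketch below is a hypothetical stdlib-only stand-in for `Credentials`; its `Invalid` exception and checks only mimic the schema above and are not Colander's API.

```python
import re


class Invalid(Exception):
    """Hypothetical validation error (a stand-in, not colander.Invalid)."""


class Credentials(object):
    """Hypothetical stdlib-only stand-in mimicking the Colander schema above."""

    # fullmatch() is used so a trailing newline is rejected too.
    USERNAME_RE = re.compile(r'[a-z0-9_.-]{1,20}')

    def deserialize(self, cstruct):
        # Only mappings are accepted, as with a mapping schema.
        if not isinstance(cstruct, dict):
            raise Invalid('expected a mapping')
        username = cstruct.get('username')
        password = cstruct.get('password')
        if not isinstance(username, str) or not self.USERNAME_RE.fullmatch(username):
            raise Invalid('invalid username')
        if not isinstance(password, str) or not 1 <= len(password) <= 100:
            raise Invalid('password must be 1 to 100 characters long')
        # Return only the known keys; any unexpected input is dropped.
        return {'username': username, 'password': password}
```

An instance of this class can be used wherever the recipe calls `Credentials()` and `deserialize`.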

Each time you call login with untrusted data, you have to validate the data with the Credentials schema first:

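```python
user = User()
schema = Credentials()
# untrusted_data is, e.g., a dict parsed from a request;
# deserialize() raises colander.Invalid if it does not match the schema.
trusted_data = schema.deserialize(untrusted_data)
user.login(**trusted_data)
```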

This extra code is the price of flexibility: such methods can also be called with trusted data, so we can't just put validation into the method itself. However, we can bind the schema to the method without losing that flexibility.

First, create a validation package with the following structure:

```
myproject/
    __init__.py
    ...
    validation/
        __init__.py
        schema.py
```

Then add the following code to myproject/validation/__init__.py (again, the use of cached_property is an inessential detail; you can use the equivalent decorator provided by your favorite framework):

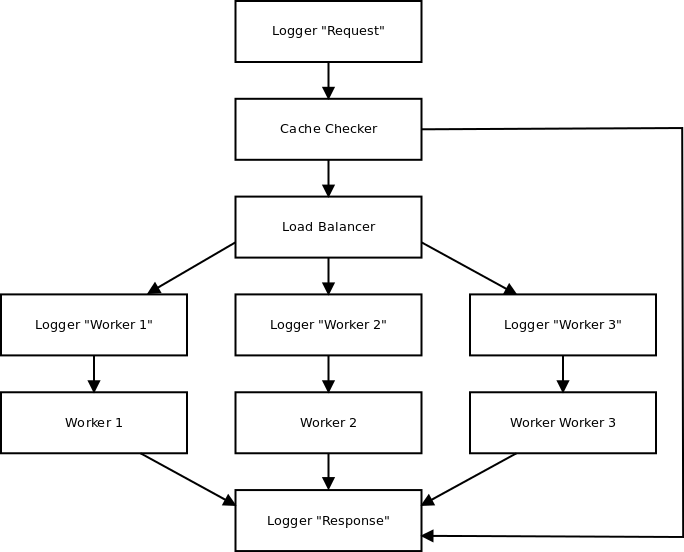

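```python
# On Python 3.8+, functools.cached_property works the same way here.
from cached_property import cached_property

from . import schema


def set_schema(schema_class):
    """Attach a validation schema class to the decorated method."""
    def decorator(method):
        method.__schema_class__ = schema_class
        return method
    return decorator


class Mixin(object):

    class Proxy(object):

        def __init__(self, context):
            self.context = context

        def __getattr__(self, name):
            # Called only when `name` is not yet cached on the proxy.
            method = getattr(self.context, name)
            method_schema = method.__schema_class__()

            def validated_method(params):
                # Validate the untrusted params, then call the real method.
                params = method_schema.deserialize(params)
                return method(**params)

            validated_method.__name__ = 'validated_' + name
            # Cache the wrapper so __getattr__ is not called again for `name`.
            setattr(self, name, validated_method)
            return validated_method

    @cached_property
    def validated(self):
        return self.Proxy(self)
```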

There are three public objects: the schema module, the set_schema decorator, and the Mixin class. The schema module is a container for all validation schemata; place the Credentials class into this module. The set_schema decorator simply attaches the given schema class to the decorated method as its __schema_class__ attribute. The Mixin class adds a proxy object, validated. The proxy gives access to the methods that have a __schema_class__ attribute and lazily creates copies of them wrapped in the validation routine; each copy is cached on the proxy after the first access. This is how it works:

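```python
from myproject import validation


# Inherit from Mixin only: listing `object` before `validation.Mixin`
# would raise a TypeError (inconsistent method resolution order).
class User(validation.Mixin):

    @validation.set_schema(validation.schema.Credentials)
    def login(self, username, password):
        ...
```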

Now we can call the validated login method in a single line of code:

```python
user = User()
user.validated.login(untrusted_data)
```
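To see the whole recipe run in one file, here is a self-contained sketch under two assumptions: it swaps in `functools.cached_property` (Python 3.8+) for the `cached_property` package, and it uses a hypothetical stdlib stand-in for the Colander `Credentials` schema (with a hypothetical `Invalid` error). The `set_schema` and `Mixin` code follows the recipe; the `login` body is a placeholder.

```python
import re
from functools import cached_property  # swapped in for the cached_property package


class Invalid(Exception):
    """Hypothetical validation error raised by the stand-in schema."""


class Credentials(object):
    """Hypothetical stdlib stand-in for the Colander Credentials schema."""

    def deserialize(self, data):
        username = data.get('username')
        password = data.get('password')
        if not isinstance(username, str) or not re.fullmatch(r'[a-z0-9_.-]{1,20}', username):
            raise Invalid('invalid username')
        if not isinstance(password, str) or not 1 <= len(password) <= 100:
            raise Invalid('invalid password')
        # Only known keys survive deserialization.
        return {'username': username, 'password': password}


def set_schema(schema_class):
    def decorator(method):
        method.__schema_class__ = schema_class
        return method
    return decorator


class Mixin(object):
    class Proxy(object):
        def __init__(self, context):
            self.context = context

        def __getattr__(self, name):
            method = getattr(self.context, name)
            method_schema = method.__schema_class__()

            def validated_method(params):
                return method(**method_schema.deserialize(params))

            validated_method.__name__ = 'validated_' + name
            setattr(self, name, validated_method)
            return validated_method

    @cached_property
    def validated(self):
        return self.Proxy(self)


class User(Mixin):
    @set_schema(Credentials)
    def login(self, username, password):
        # Placeholder body: just report who logged in.
        return 'logged in as ' + username


user = User()
# Untrusted input goes through the schema; the unexpected key is dropped.
print(user.validated.login({'username': 'alice', 'password': 's3cret', 'is_admin': True}))
# Trusted input can still call the method directly, without validation.
print(user.login('bob', 'trusted'))
```

This prints `logged in as alice` and then `logged in as bob`; invalid input such as `{'username': 'Alice!', 'password': 'x'}` raises `Invalid` before `login` is ever called.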

So what do we get? The code is more compact; it is still flexible, i.e. we can still call the method without validation; and it is more readable and self-documenting.